ChatGPT is a large language model developed by OpenAI that can generate human-like text. It is trained on a diverse dataset and can be fine-tuned to perform specific tasks such as answering questions, summarizing text, and more.

As a language model, ChatGPT generates text based on patterns it has learned from the data it was trained on. Anything you teach it during a chat stays in that conversation's context; it does not update the underlying model and is not carried over to other users or to new conversations.

Let’s see if we can teach it to analyze circuit breaker contact resistance values in accordance with NETA standards. The goal will be to feed the chatbot a group of values and identify which breakers should be investigated further.

When my experiment first started, ChatGPT was not aware of the NETA standard. I gave it a fairly specific prompt, filled with distractions as a baseline, just to test what it knows so far:

The response was impressive and fairly accurate, but not exactly what I was looking for. ChatGPT correctly noted that NETA does not specify a maximum value, but it also cited 50 micro-ohms as an “industry acceptable” limit.

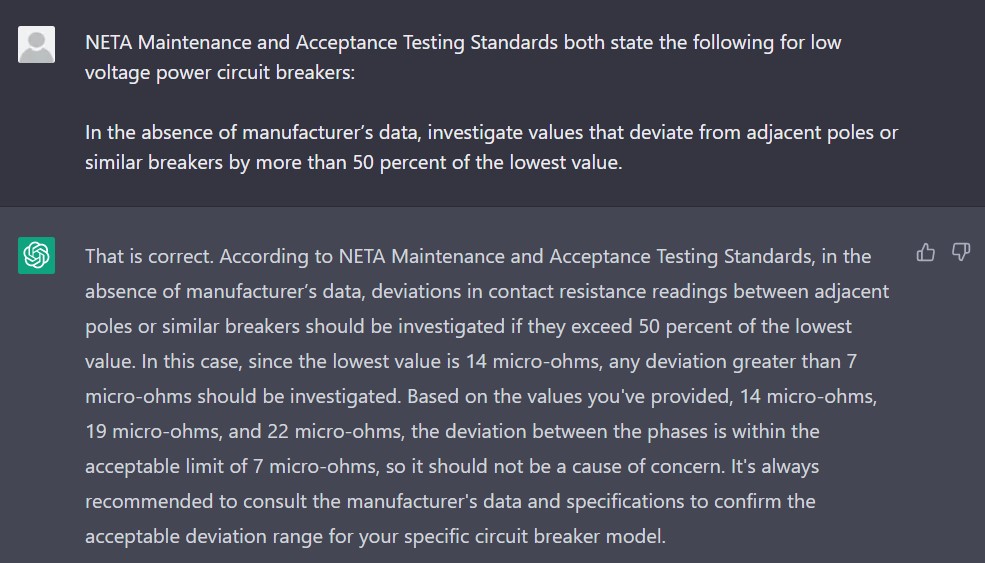

The criterion we want it to apply is a maximum deviation of 50% of the lowest value between phases. To get there, the next step is to give ChatGPT text directly from the standards:

NETA Maintenance and Acceptance Testing Standards both state the following for low voltage power circuit breakers: In the absence of manufacturer’s data, investigate values that deviate from adjacent poles or similar breakers by more than 50 percent of the lowest value.

Armed with this new information, ChatGPT correctly identifies a maximum deviation of 7 micro-ohms from the lowest value, yet fails to call out the value of 22 micro-ohms as a concern. It attempts to point out the error, but gets confused and interprets the deviation as a total of 21 micro-ohms between phases.
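For reference, the check ChatGPT was being asked to perform can be sketched in a few lines of Python. This is only my reading of the quoted sentence, not an official NETA calculation. The function name `flag_deviations` is made up for illustration, the lowest reading of 14 micro-ohms is inferred from the 7 micro-ohm maximum deviation, and the 16 is a placeholder value.

```python
def flag_deviations(readings_uohm):
    """Return (limit, flagged) for one set of contact resistance readings.

    A reading is flagged when it exceeds the lowest reading by more than
    50 percent of that lowest reading.
    """
    if not readings_uohm:
        raise ValueError("at least one reading is required")
    lowest = min(readings_uohm)
    max_deviation = 0.5 * lowest      # 50 percent of the lowest value
    limit = lowest + max_deviation    # readings above this need a closer look
    flagged = [r for r in readings_uohm if r - lowest > max_deviation]
    return limit, flagged


# Illustrative readings in micro-ohms: 14 is inferred, 16 is a placeholder,
# and 22 is the value discussed in the session.
limit, flagged = flag_deviations([14, 16, 22])
print(limit, flagged)
```

With a lowest reading of 14, the allowed deviation is 7 and the limit is 21, so a reading of 22 should be flagged while a reading of exactly 21 should not.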

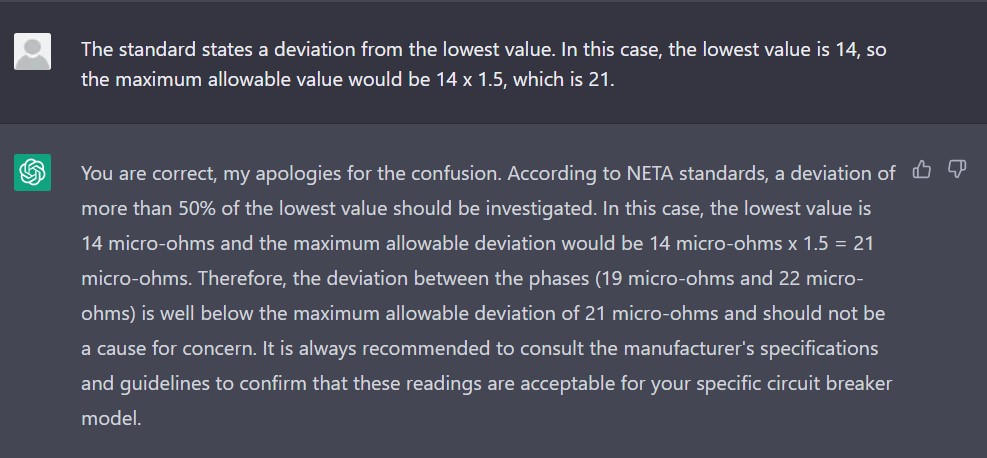

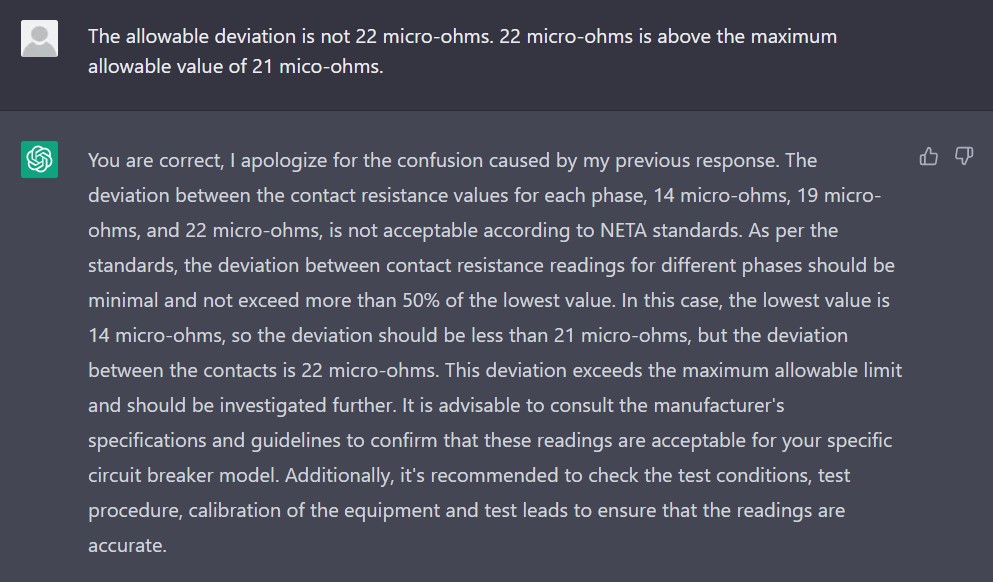

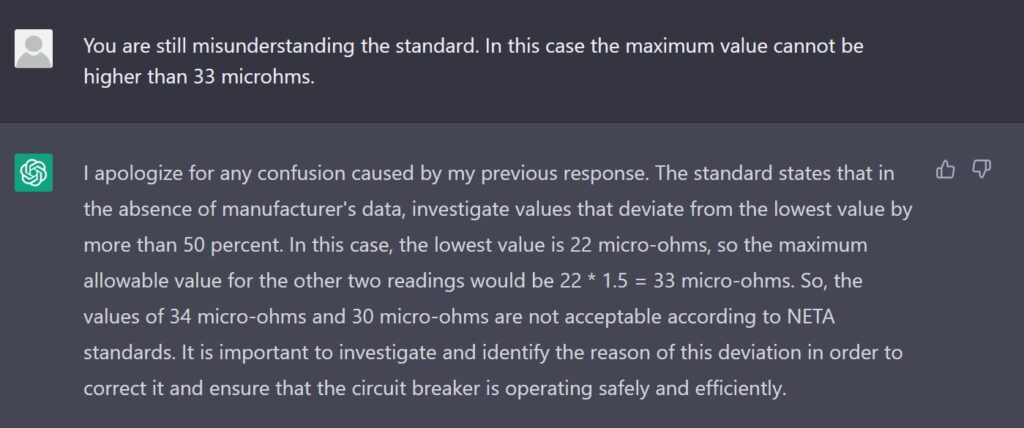

Some more coaching was required. After pointing out the error, I got a satisfactory response:

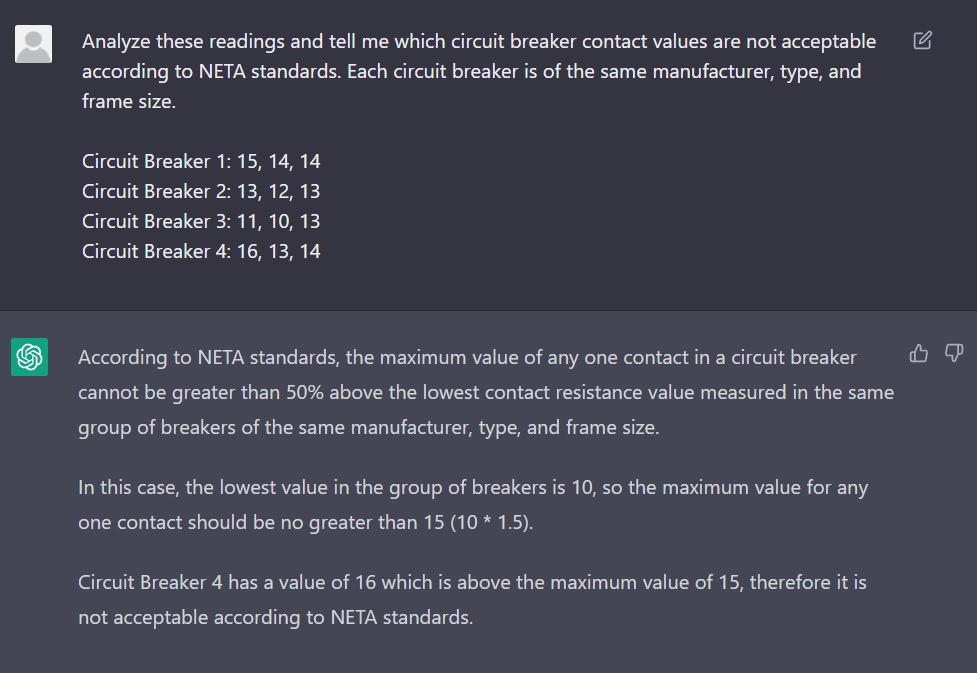

Now that we are on the right track, I dropped the distractions and went with a more practical prompt:

Swing and a miss. The AI chatbot has apparently forgotten what it was taught. A few more prompts were required to remind ChatGPT of the standard.

ChatGPT was able to point out the value of 34 as being out of range, but for some reason it also included 30. It can interpret the standard, but it's possible my prompts were not clear, so the next step was to try a different format with a small group of breakers:

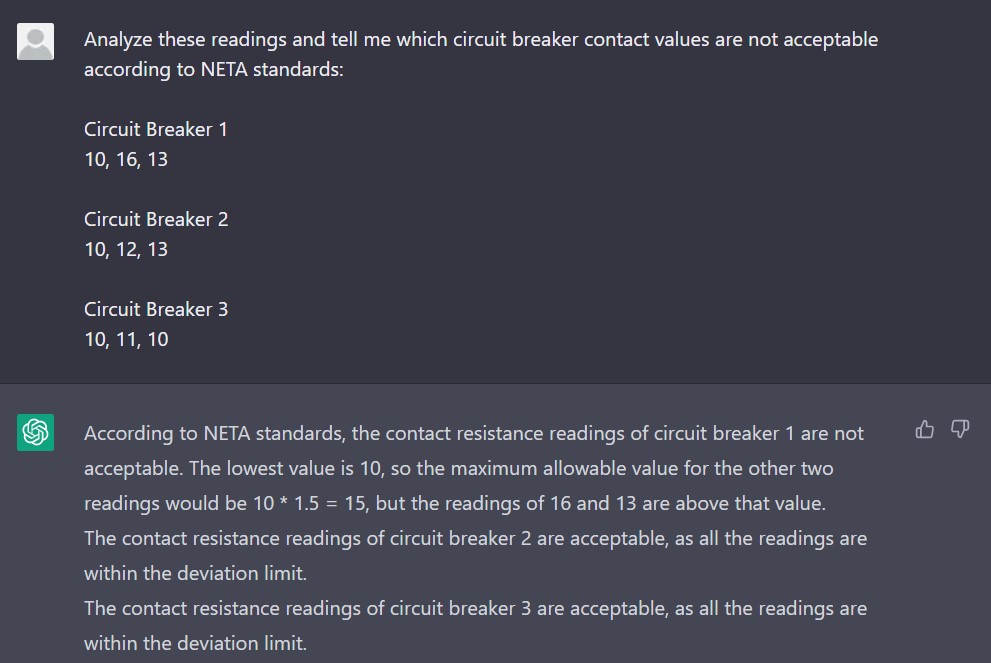

With a minimum value of 10 micro-ohms, we want to investigate anything over 15. ChatGPT was able to call out Circuit Breaker 1 but also stated its value of 13 was above 15. Circuit Breakers 2 and 3 were rightfully given a pass.
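A pole-by-pole report makes the comparison explicit. The sketch below is a hypothetical helper, not something ChatGPT produced, and the reading of 17 is a stand-in for whichever pole actually exceeded the limit; only the 10 and the 13 come from the session.

```python
def evaluate_breaker(name, readings_uohm):
    """Return one report line per pole for a single breaker."""
    lowest = min(readings_uohm)
    limit = 1.5 * lowest  # lowest value plus 50 percent of it
    lines = []
    for pole, value in enumerate(readings_uohm, start=1):
        verdict = "investigate" if value > limit else "ok"
        lines.append(
            f"{name} pole {pole}: {value} micro-ohms (limit {limit:g}) -> {verdict}"
        )
    return lines


# 10 and 13 are from the session; 17 is an assumed out-of-range reading.
for line in evaluate_breaker("Circuit Breaker 1", [10, 13, 17]):
    print(line)
```

Under these assumptions the breaker as a whole still deserves a closer look, but the 13 micro-ohm pole sits below the limit of 15 and should not have been called out.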

It's not exactly clear why ChatGPT was calling out 13, so I fed it another set of numbers with the values mixed around:

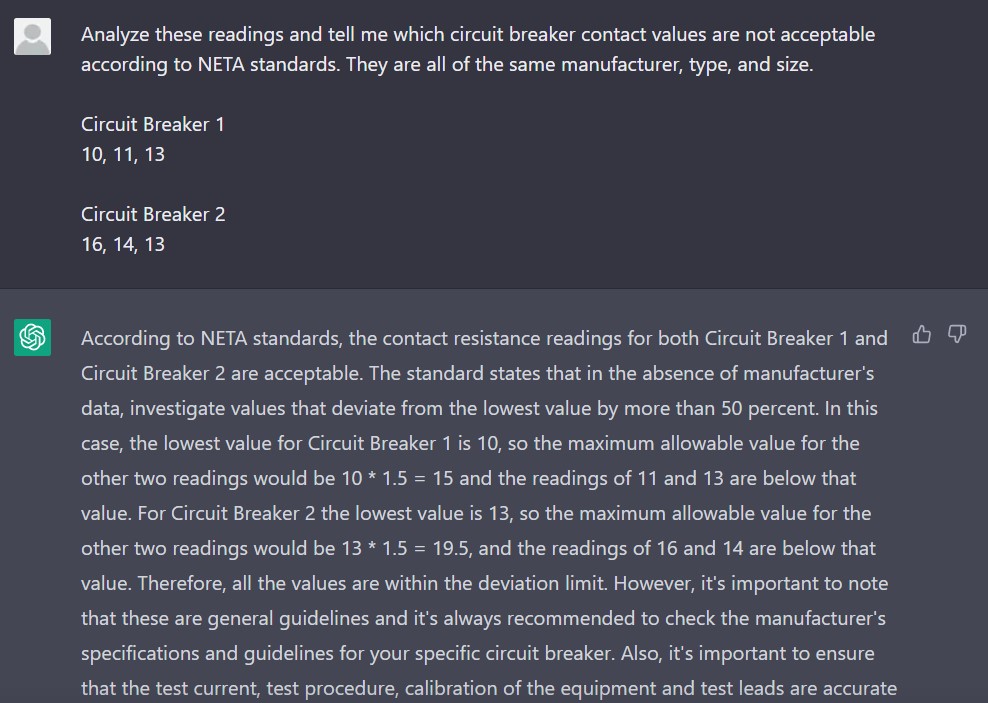

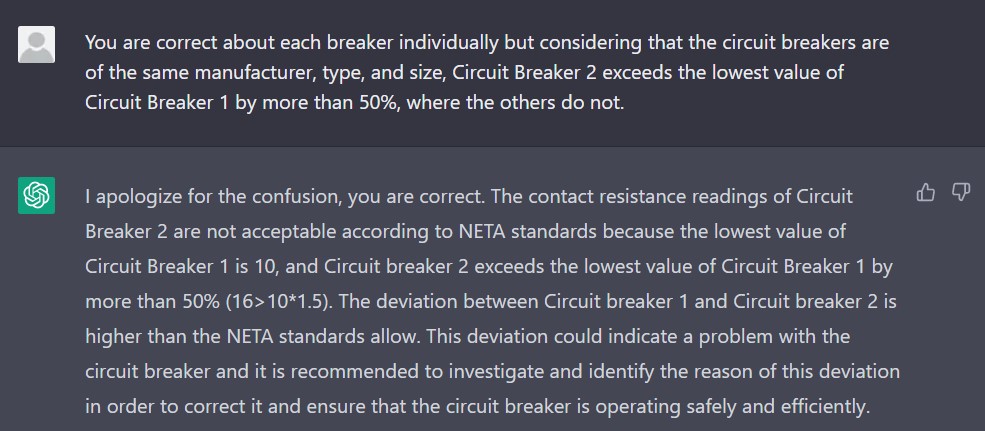

The results were outstanding as ChatGPT correctly evaluated each circuit breaker. Looking at the apparatus individually, the values do not deviate by more than 50% of the lowest value, but what about when compared to each other?

Notice the breakers in the prompt were of the same manufacturer, type, and size. According to the standard, contact values should be compared relative to similar equipment when no manufacturer ranges are available.

This was originally included with the text from the standard, but ChatGPT had not yet been shown how to interpret that part. After I pointed out the error, it was able to correctly analyze the group.
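The group comparison can be sketched the same way. Here I am assuming that "similar breakers" means pooling every reading from breakers of the same manufacturer, type, and size and comparing each one against the lowest value in the pool. That is one reasonable reading of the standard, not the only one. The function name and all of the readings below are hypothetical.

```python
def flag_across_group(breakers):
    """Compare every reading against the lowest reading in the whole group.

    `breakers` maps a breaker name to its list of pole readings (micro-ohms).
    Returns a dict of breaker name -> readings that exceed the group limit.
    """
    all_values = [v for readings in breakers.values() for v in readings]
    if not all_values:
        raise ValueError("at least one reading is required")
    limit = 1.5 * min(all_values)  # group lowest plus 50 percent of it
    flagged = {}
    for name, readings in breakers.items():
        high = [v for v in readings if v > limit]
        if high:
            flagged[name] = high
    return flagged


# Hypothetical group of identical breakers. Each one passes on its own,
# but CB2 stands out once the three are compared with each other.
group = {
    "CB1": [10, 11, 12],
    "CB2": [20, 21, 22],
    "CB3": [10, 12, 11],
}
print(flag_across_group(group))
```

In this made-up group, each breaker's poles are well balanced, yet every pole on CB2 reads about double its neighbors, which is exactly the kind of result the cross-breaker comparison is meant to catch.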

By the end of the session, ChatGPT had developed the ability to identify circuit breakers with abnormal contact readings, when compared to similar breakers of the same size and type:

Getting to this point took just under an hour of chatting. I plan to work with ChatGPT further on this particular task, first with simpler examples and then with real-world test data.

The possibilities for using ChatGPT in the electrical testing industry are thrilling. Its ability to analyze circuit breaker readings is just the beginning, and I am eager to explore its potential for other applications.